ChatGPT's new version disappoints and halts the dream of the promised superhuman AI.

As if he were Steve Jobs announcing the iPhone in 2007, Sam Altman yesterday presented the new apple of his eye: GPT-5, the latest update to ChatGPT. Broadly speaking, it is smarter, faster, and better at reasoning than its predecessors. But that is all it is. This isn't the artificial general intelligence (AGI) Altman is pursuing, nor is it even superior to, say, the best programmer on Earth.

This has disappointed the AI experts who, eagerly awaiting the announcement over the past week, saw Altman generate hype by tweeting things like a Death Star, implying that GPT-5 was going to "annihilate" all other AI systems on the market. That hasn't happened, and the launch was reminiscent of the fiasco of Apple Intelligence, which fell short of the promises made when it was announced at WWDC 2024.

Matt Shumer, an AI expert and owner of OthersideAI, got access to GPT-5 a few weeks ago. He tells ABC that, while it is the best overall model so far, "you have to put in a lot of effort to get the most out of it." The average user cannot yet do that, so they will not notice much difference from the GPT-4.5 they have used until now.

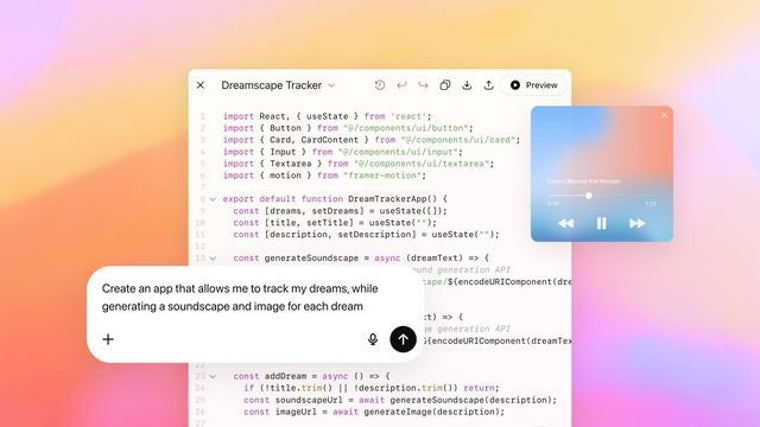

"GPT-5 is very detail-oriented, focused on being best in long contexts, because it makes fewer stupid mistakes there. We've found it to be ideal, if not the best, AI for programming," Shumer notes. In fact, Altman noted during the new model's presentation that it was the "perfect assistant for a programmer."

According to the company, GPT-5 achieves a 74.9% success rate on the first attempt.

OpenAI

In the SWE-bench Verified benchmark, which focuses on real-world GitHub tasks, GPT-5 achieves a 74.9% success rate on the first try. No alternative has ever achieved this level. According to the company led by Sam Altman, it outperforms Claude Opus 4.1 (74.5%) and Gemini 2.5 Pro (59.6%). It can create websites from scratch with just a few instructions, design applications, interactive games, or debug code in large repositories without losing precision. It not only executes, it also explains what it does and why.

GPT-5 doesn't just improve on the inside: it also changes the way we interact with it. With its arrival, ChatGPT incorporates four new personalities: Cynic, Robot, Listener, and Nerd. Each responds in its own style, adjusting tone, attitude, and approach without the need for instructions. Users can also change the color of their chat with the AI, although this option is only available on paid accounts. Everything indicates that OpenAI's relationship with Jony Ive, the former Apple designer, has influenced GPT-5's new features.

However, it seems that OpenAI's o3 remains the best option for scientific research, while GPT-4.5 is the ideal model for writing. The good news is that, since yesterday, GPT-5 has been rolling out for free to all ChatGPT users. Free users will have access to both GPT-5 and a faster but less accurate version, GPT-5 mini, marking the first time an advanced reasoning model has been available without a subscription.

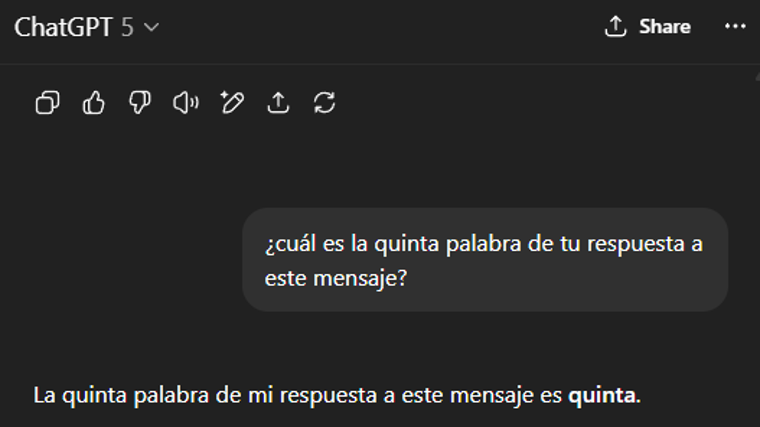

While there are reasons to be excited about GPT-5 (the model abandons version subdivision to become a single model that decides in real time whether to prioritize reasoning or speed), the leap is not the one that was promised. Two of the new model's first testers told Reuters that they were impressed with its ability to program and to solve science and math problems, but they believe the leap from GPT-4 to GPT-5 is not as great as that from GPT-3 to GPT-4. "It's far from that idea of near-human intelligence that some inside and outside of OpenAI have been hinting at for years," they emphasize. At ABC, we ran a couple of tests and, as the experts point out, it falters when asked very basic questions, such as whether GPT-5 already exists or what the fifth word in its answer is.

ChatGPT continues to fail at the most basic things, which are critical for the average AI user.

ABC

The development of GPT-5 hasn't been easy either. Altman publicly acknowledged that the launch had to be delayed for several months because they couldn't integrate all the model's components. He also said they wanted to ensure they had enough capacity for what they anticipated would be "unprecedented" demand. But there was more to those delays. For one, OpenAI faced a data problem: there are no longer many new, large, and clean sources on which to train models of this type.

Ilya Sutskever, the company's former chief scientist, explained it this way: "We can scale in power, but not in data." Another problem was that "training runs" for large models are more prone to hardware-induced glitches given the complexity of the system, and researchers might not know the final performance of the models until the end of the run, which can take months.

ABC.es